TL;DR LayerFusion introduces a harmonized text-to-image generation framework that dynamically generates foreground (RGBA), background (RGB), and blended images simultaneously, enabling seamless layer interactions without additional training.

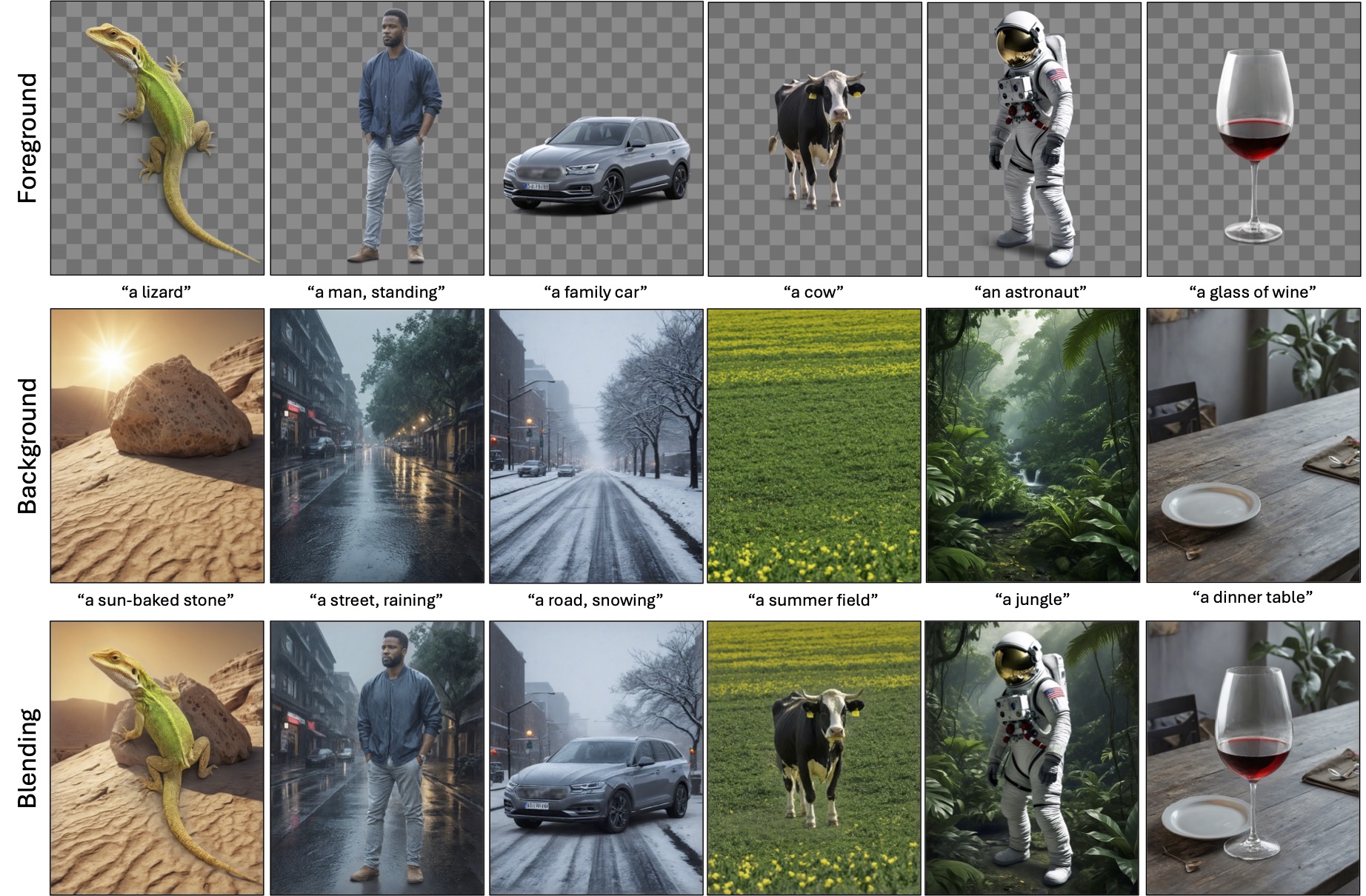

We propose a framework for generating a foreground (RGBA), background (RGB), and blended (RGB) image simultaneously from an input text prompt. Our optimization-free blending approach targets the attention layers and introduces an interaction mechanism between the image layers (i.e., foreground and background) to achieve harmonization during blending. Because the framework produces layered representations, it also enables straightforward spatial editing with the generated image layers.

Abstract

Large-scale diffusion models have achieved remarkable success in generating high-quality images from textual descriptions, gaining popularity across various applications. However, the generation of layered content, such as transparent images with foreground and background layers, remains an under-explored area. Layered content generation is crucial for creative workflows in fields like graphic design, animation, and digital art, where layer-based approaches are fundamental for flexible editing and composition. In this paper, we propose a novel image generation pipeline based on Latent Diffusion Models (LDMs) that generates images with two layers: a foreground layer (RGBA) with transparency information and a background layer (RGB). Unlike existing methods that generate these layers sequentially, our approach introduces a harmonized generation mechanism that enables dynamic interactions between the layers for more coherent outputs. We demonstrate the effectiveness of our method through extensive qualitative and quantitative experiments, showing significant improvements in visual coherence, image quality, and layer consistency compared to baseline methods.

Method

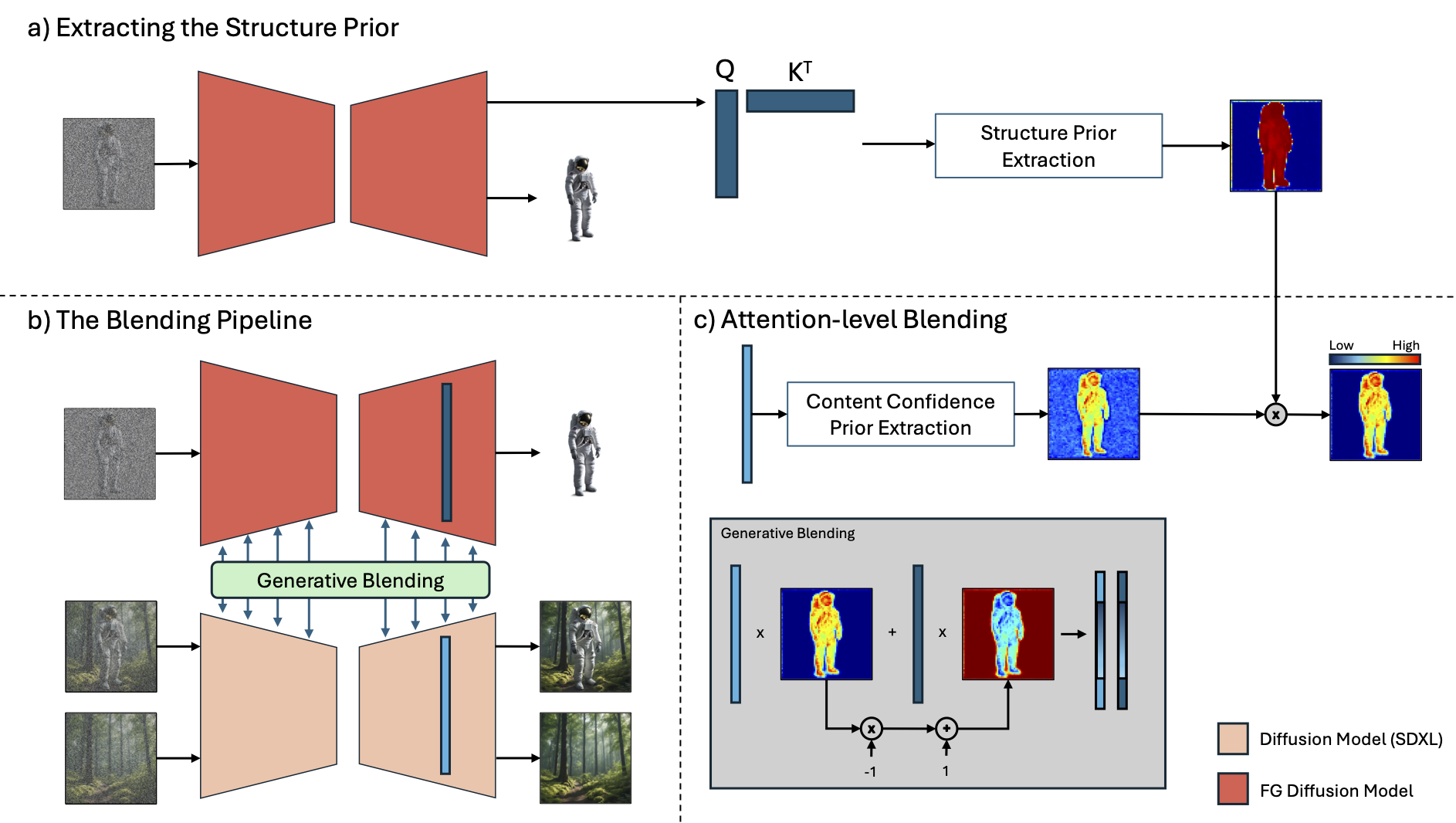

By making use of the generative priors extracted from the transparent generation model $\epsilon_{\theta,\mathrm{FG}}$, LayerFusion is able to generate image triplets consisting of a foreground (RGBA), a background, and a blended image. Our framework involves three interconnected components. First, we introduce a prior pass on $\epsilon_{\theta,\mathrm{FG}}$ (a) to extract the structure prior. We then introduce an attention-level interaction between the two denoising networks ($\epsilon_{\theta,\mathrm{FG}}$ and $\epsilon_{\theta}$) (b), using an attention-level blending scheme that combines a layer-wise content confidence prior with the structure prior (c).
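To make the idea of attention-level blending more concrete, here is a minimal sketch of a mask-weighted blend between two attention outputs. It is an illustration under stated assumptions, not the exact formulation used by LayerFusion: the function name `blend_attention_outputs`, the `(tokens, channels)` layout, and the simple convex combination are all hypothetical choices.

```python
import numpy as np

def blend_attention_outputs(fg_out, bg_out, mask):
    """Blend two attention outputs with a soft spatial mask.

    fg_out, bg_out: (tokens, channels) arrays, one row per spatial location.
    mask: (tokens,) array in [0, 1]; 1 means the foreground branch dominates.
    NOTE: a convex combination is an assumed blending rule for illustration.
    """
    if fg_out.shape != bg_out.shape:
        raise ValueError("foreground and background outputs must match in shape")
    m = np.clip(mask, 0.0, 1.0)[:, None]  # add a channel axis for broadcasting
    return m * fg_out + (1.0 - m) * bg_out

# Toy example: 4 spatial tokens with 2 channels each.
fg = np.ones((4, 2))    # stand-in for the foreground branch output
bg = np.zeros((4, 2))   # stand-in for the background branch output
mask = np.array([1.0, 0.5, 0.0, 0.25])
blended = blend_attention_outputs(fg, bg, mask)
```

In this toy setup each blended token simply takes the value of its mask entry, which makes it easy to see how a soft mask controls the contribution of each branch at every spatial location.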

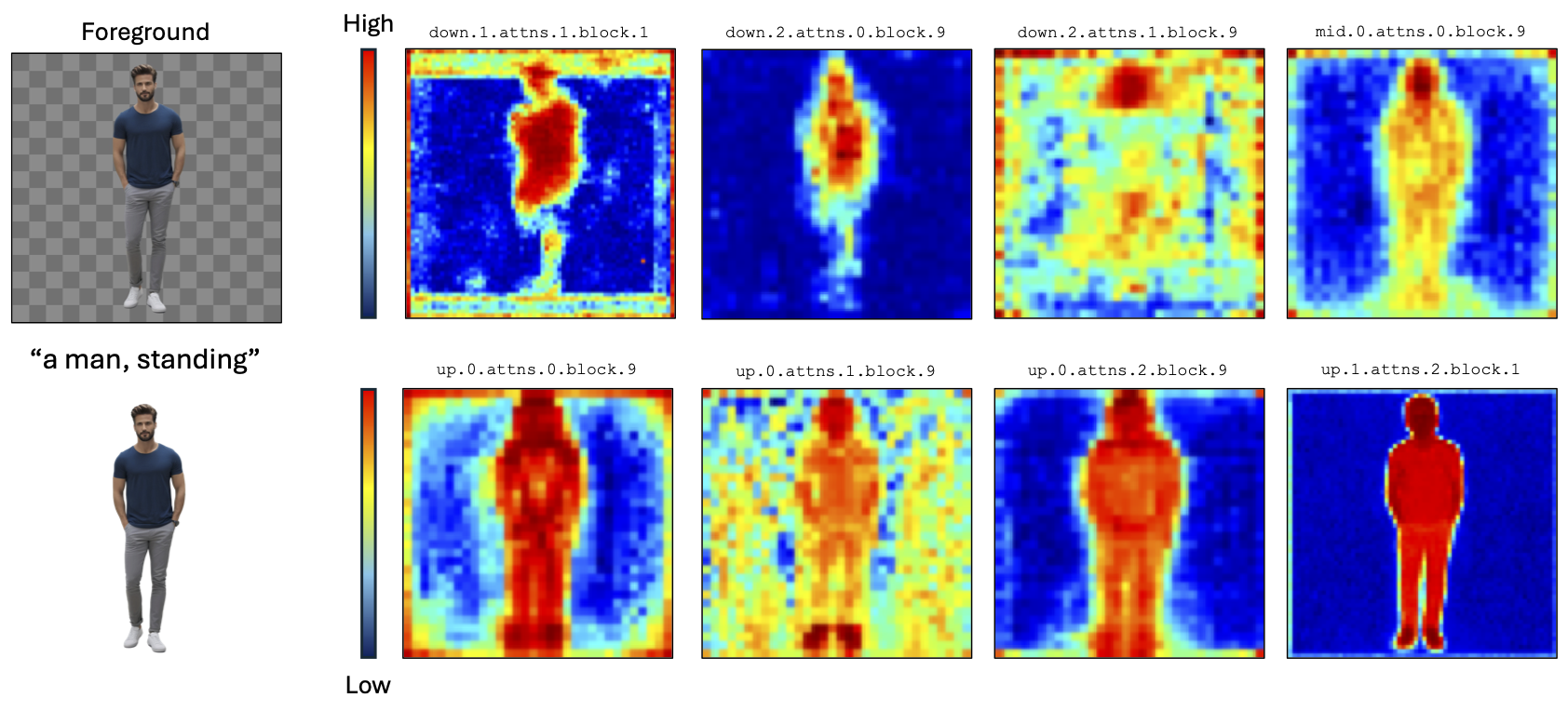

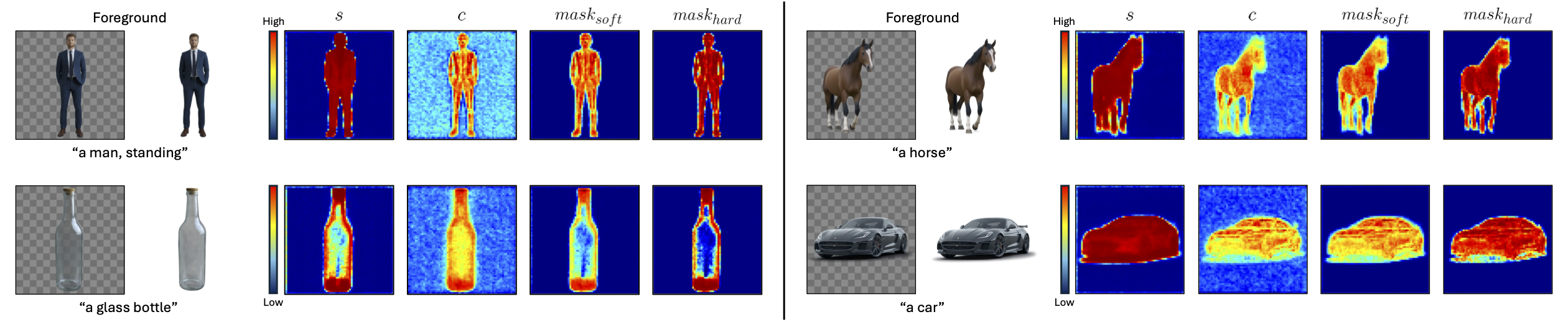

Masks as Generative Priors

Observing consecutive layers of the foreground diffusion model $\epsilon_{\theta,\mathrm{FG}}$, we find that, toward the final layers, the attention layers highlight the object structure.

By combining the structure and content confidence priors, LayerFusion can enable harmonization across layers, where the composite masks can encode vital information such as transparency.
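As a rough illustration of how two soft priors could be turned into a single composite mask, the sketch below averages attention maps into a normalized structure map and multiplies it by a confidence map. Everything here is an assumption made for the example: the helper names `structure_prior` and `combine_priors`, the averaging over heads, the min-max normalization, and the elementwise product are hypothetical and are not claimed to match the method's actual definitions.

```python
import numpy as np

def structure_prior(attn_maps):
    """Average attention maps over heads and rescale to [0, 1].

    attn_maps: (heads, H, W) non-negative array.
    NOTE: head averaging and min-max scaling are assumptions.
    """
    avg = attn_maps.mean(axis=0)
    lo, hi = avg.min(), avg.max()
    if hi - lo < 1e-8:               # flat map: no structure to extract
        return np.zeros_like(avg)
    return (avg - lo) / (hi - lo)

def combine_priors(structure, confidence):
    """Combine two soft masks by elementwise product (assumed rule)."""
    return np.clip(structure, 0.0, 1.0) * np.clip(confidence, 0.0, 1.0)

# Toy example: two identical 2x2 attention heads and a confidence map.
attn = np.array([[[0.0, 2.0], [4.0, 8.0]],
                 [[0.0, 2.0], [4.0, 8.0]]])
confidence = np.array([[1.0, 1.0], [0.5, 0.5]])
soft_mask = combine_priors(structure_prior(attn), confidence)
```

Because the result stays in [0, 1] rather than being thresholded, a mask of this kind can represent partial coverage, which is one way a composite mask could carry transparency information.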

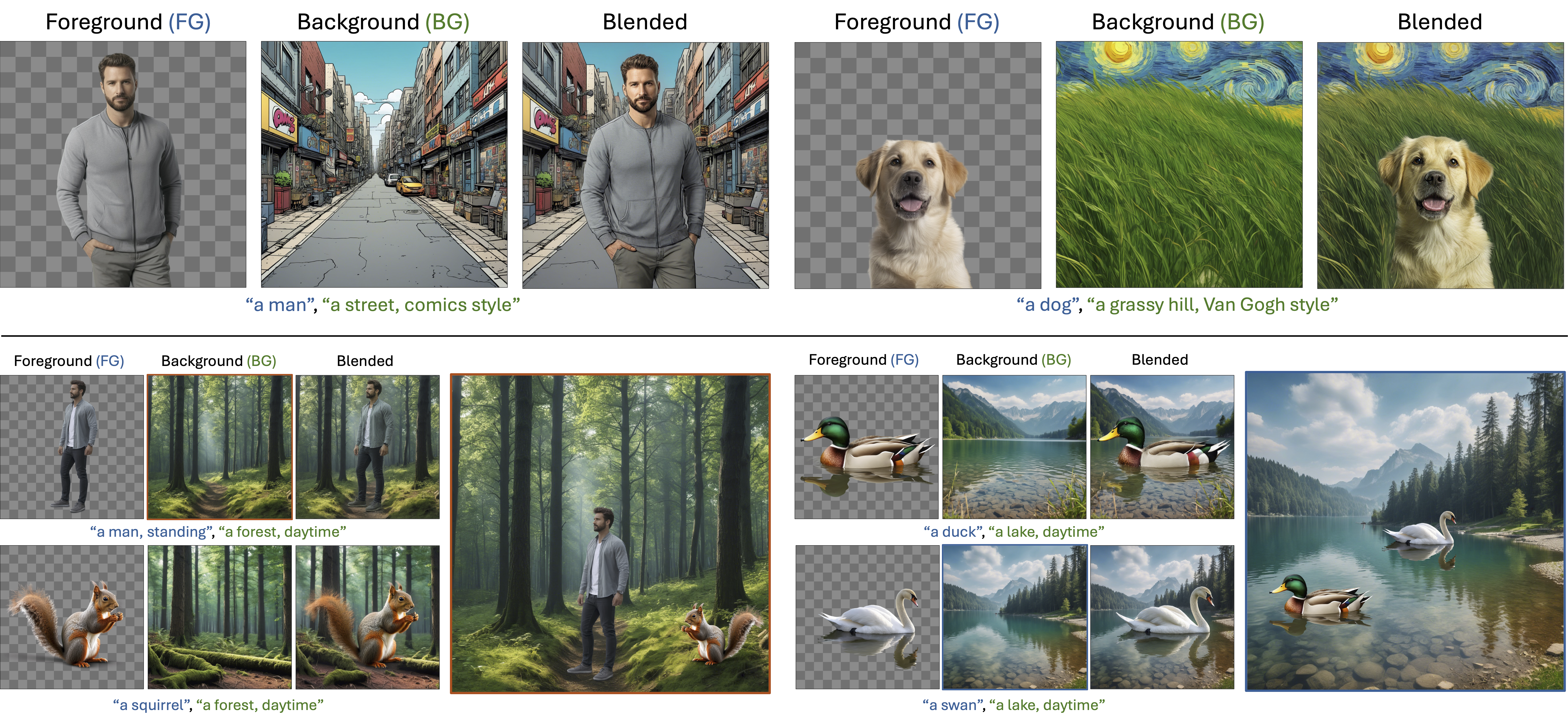

Generation Results

Generations on Different Visual Concepts

LayerFusion can generate image triplets, each with an RGBA foreground, an RGB background, and an RGB blended image, across different visual concepts.

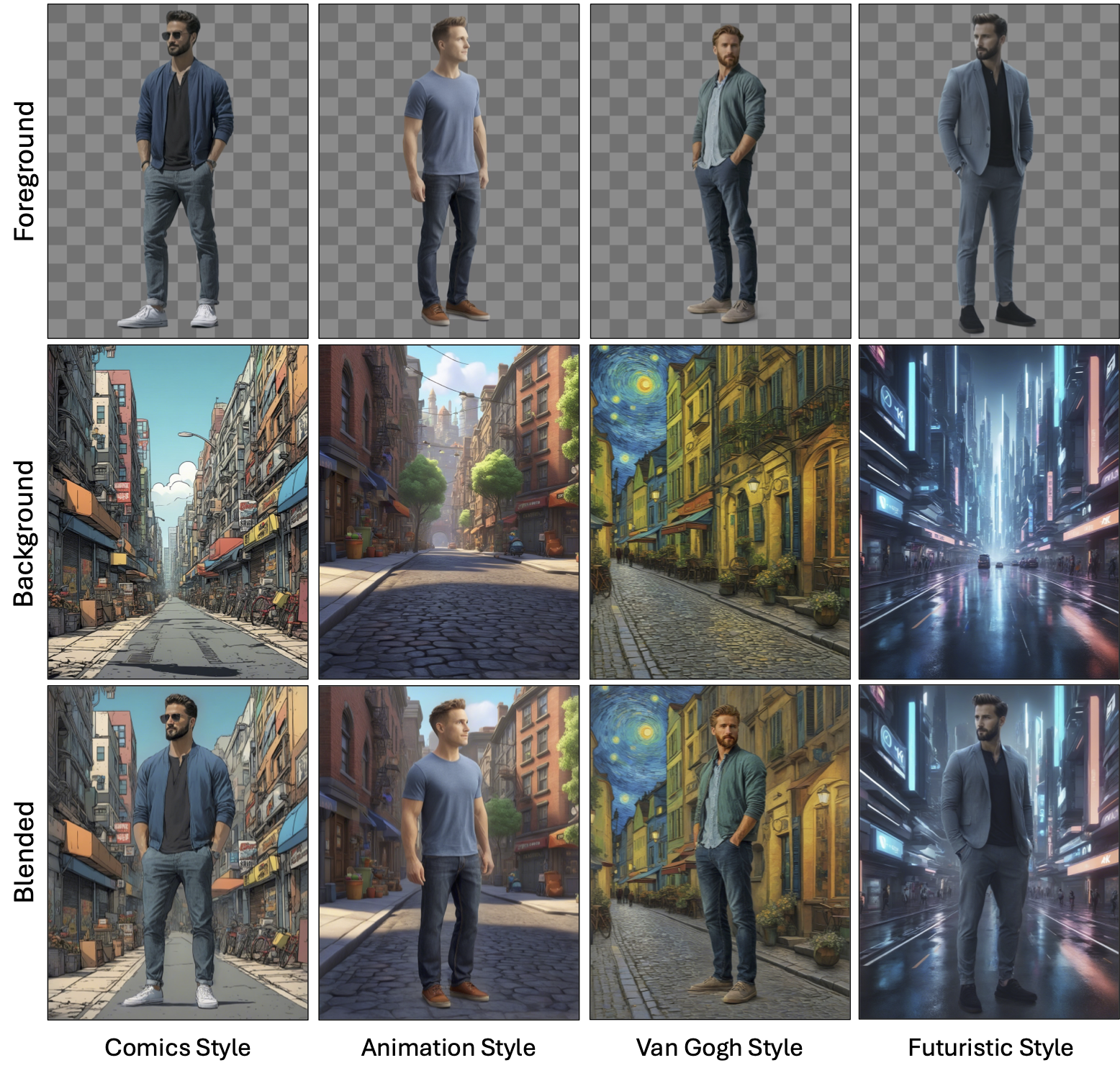

Generations with Stylization Prompts

LayerFusion can harmonize foreground and background layers while staying faithful to the styling conditions.

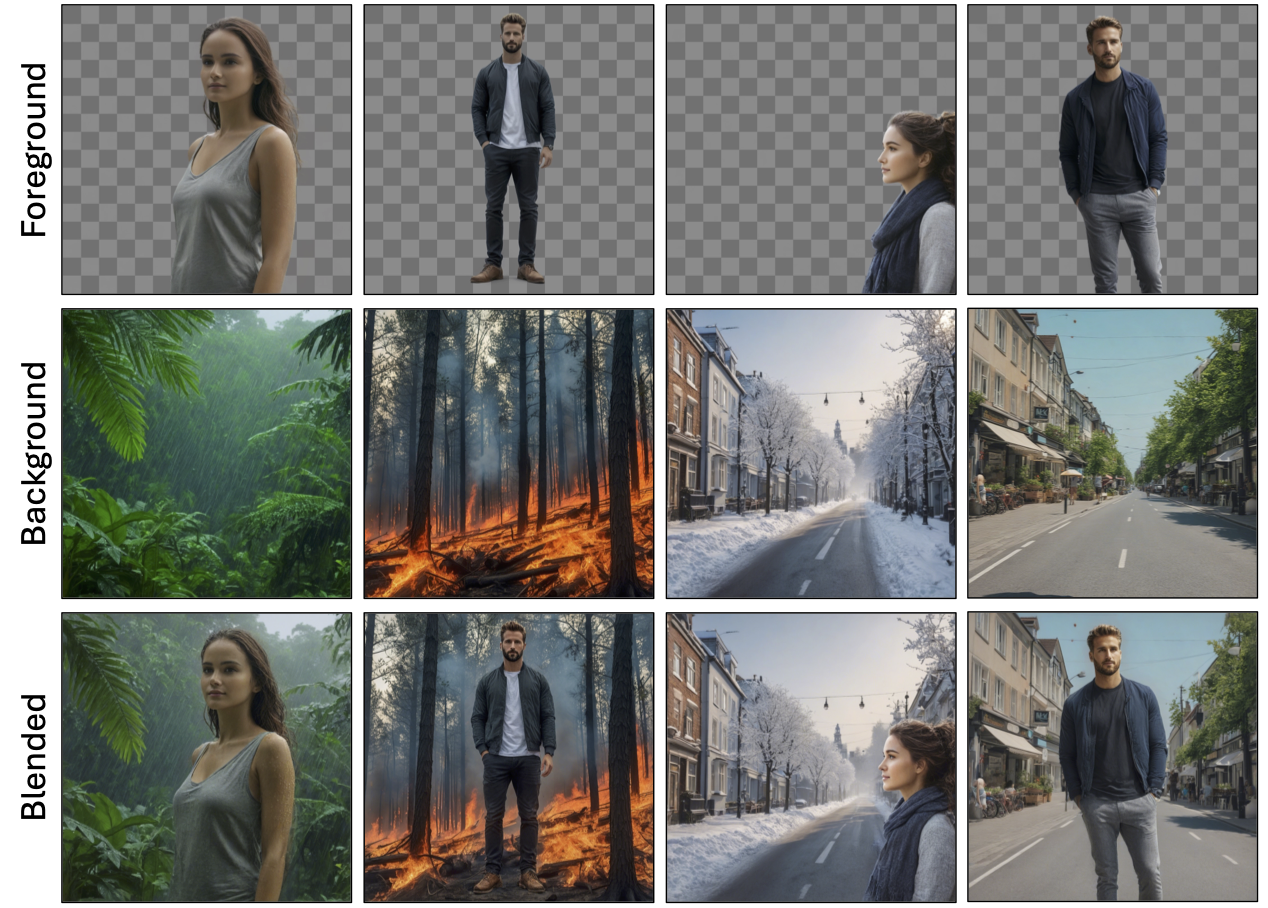

Generations on Different Background Conditions

With the information shared between the foreground and background layers, LayerFusion can modify the appearance of each layer so that the two are compatible with each other.

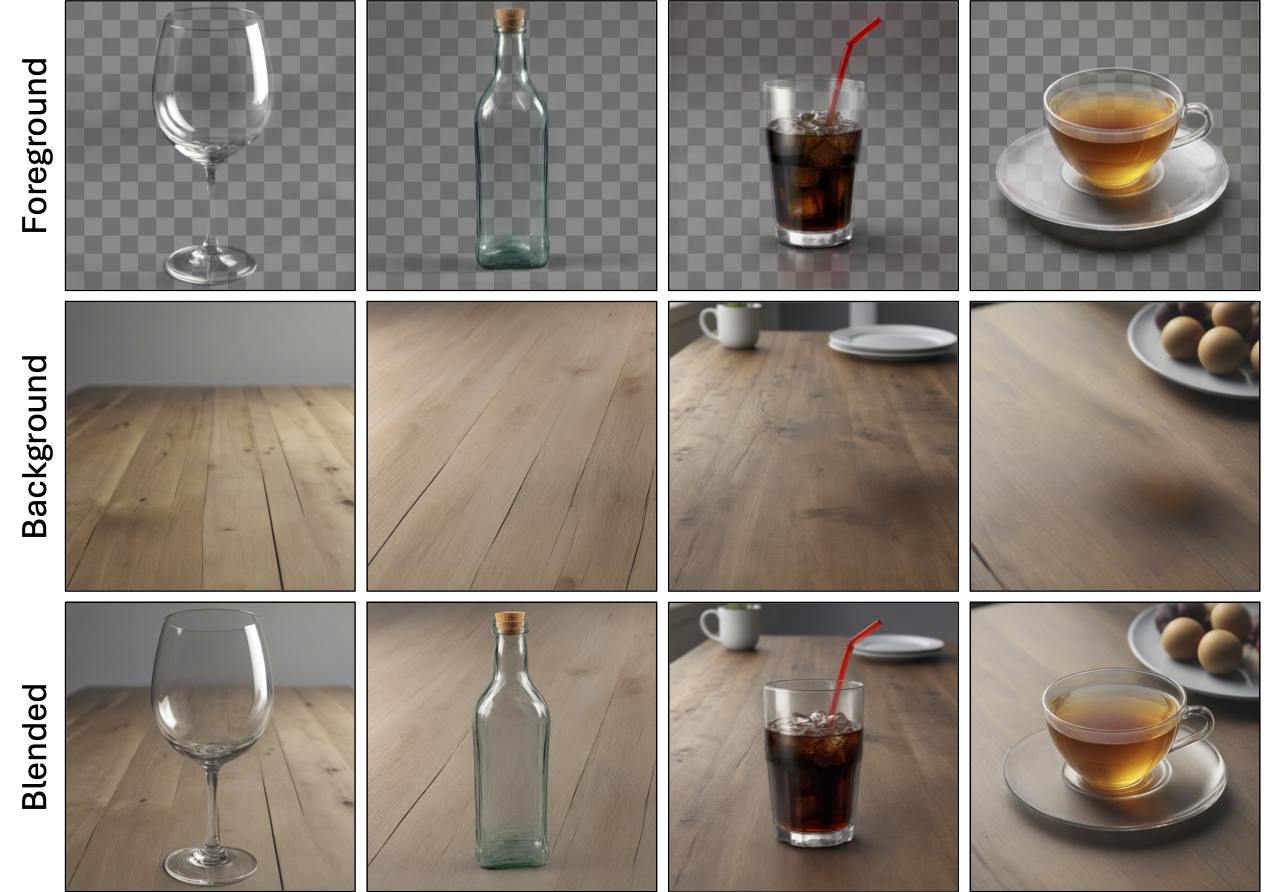

Generations with Transparent Foregrounds

During blending, LayerFusion preserves the transparency of the foreground objects.
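For readers less familiar with RGBA layers, the sketch below shows the standard "over" compositing operator, which places a foreground with per-pixel transparency on top of an RGB background. This is only a naive baseline for understanding what the alpha channel encodes. LayerFusion's blended image is produced by the generation process itself, so this is not a description of its blending. The function name `composite_over` and the use of straight (non-premultiplied) alpha with float values in [0, 1] are assumptions of the example.

```python
import numpy as np

def composite_over(fg_rgba, bg_rgb):
    """Composite an RGBA foreground over an RGB background.

    fg_rgba: (H, W, 4) float array in [0, 1], straight (non-premultiplied) alpha.
    bg_rgb:  (H, W, 3) float array in [0, 1].
    """
    alpha = fg_rgba[..., 3:4]        # keep the last axis so it broadcasts over RGB
    return alpha * fg_rgba[..., :3] + (1.0 - alpha) * bg_rgb

# Toy example: a 1x2 image with a half-transparent red pixel and a fully
# transparent pixel, placed over a solid blue background.
fg = np.array([[[1.0, 0.0, 0.0, 0.5],
                [1.0, 0.0, 0.0, 0.0]]])
bg = np.array([[[0.0, 0.0, 1.0],
                [0.0, 0.0, 1.0]]])
out = composite_over(fg, bg)
```

The half-transparent pixel becomes an even mix of red and blue, while the fully transparent pixel shows the background unchanged.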

BibTeX

@misc{dalva2024layerfusion,
  title={LayerFusion: Harmonized Multi-Layer Text-to-Image Generation with Generative Priors},
  author={Yusuf Dalva and Yijun Li and Qing Liu and Nanxuan Zhao and Jianming Zhang and Zhe Lin and Pinar Yanardag},
  year={2024},
  eprint={2412.04460},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2412.04460},
}